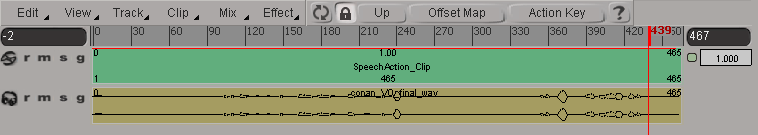

Lip-sync animation is implemented as a special type of action clip (the Speech Action clip) in the mixer, but in every other respect it acts like a normal action clip and can be treated as one as far as mixing goes.

You can blend the weight of lip-sync animation in the speech clip with motion capture and/or keyframe animation that is on the same animation controls.

You can add action clips of head movements, eye blinking, etc. to the animation mixer on different tracks, then have that animation running at the same time as the lip-sync animation that's in the Speech Action clip.

If you want to blend lip-sync animation with mocap data, it's best to do the lip sync first. Then when you add the mocap, its data is driven by the retargeting operators so that it's still "live". This allows you to adjust the mocap using the options in the Adjust panel (see Adjusting the Retargeted Mocap Data) while editing the lip sync at the same time. Each type of data affects the animation controls as it should, and you can then control how much each type of animation contributes to the result.

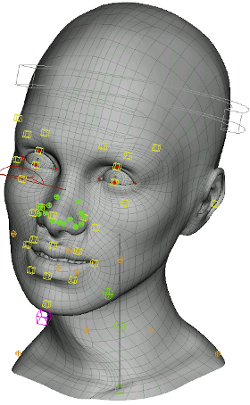

In the image above, mocap animation on the head is blended with lip-sync animation on the mouth controls.

By default, the mocap and the lip-sync animation each contribute equally to the result, but what you usually want is to use the mouth animation from one source and the jaw animation from the other. To do this, you can let either source drive the animation:

To have the lip-sync animation drive the animation, you can open the Adjust panel and reduce the weight of the Upper Lip, Lower Lip, and Jaw channels in the Global Controls group. Setting these values to zero means that the lip-sync operator (SpeechBlend) is the only thing affecting those animation controls. Of course, you can set these values to any number and even animate them.

To have the mocap drive the animation, you can reduce the weight of the Lip, Jaw, and Tongue channels in the Speech Blend property editor (see Weighting the Lips, Jaw, and Tongue Globally). As with the Adjust controls, you can set these values to any number you like and animate them.

You may also choose to use only parts of the lip sync with the mocap. For instance, you can disable the lips and jaw channels of the lip sync so that you are using only the tongue lip-sync animation with the mocap.
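The effect of these per-channel weights can be pictured with a small conceptual sketch. It assumes a simple normalized weighted average for each channel; this is an illustration of the idea only, not the actual SpeechBlend or retargeting operator, and every name and value in it (`blend_channel`, `blend_pose`, the channel names, the numbers) is hypothetical.

```python
def blend_channel(mocap_value, lipsync_value, mocap_weight, lipsync_weight):
    """Return the normalized weighted average of two sources for one channel."""
    total = mocap_weight + lipsync_weight
    if total == 0:
        # Neither source contributes; fall back to a neutral value (assumption).
        return 0.0
    return (mocap_value * mocap_weight + lipsync_value * lipsync_weight) / total


def blend_pose(mocap, lipsync, mocap_weights, lipsync_weights):
    """Blend every channel of a pose with its own pair of weights."""
    return {
        ch: blend_channel(mocap[ch], lipsync[ch], mocap_weights[ch], lipsync_weights[ch])
        for ch in mocap
    }


# Made-up control values for a single frame.
mocap = {"lips": 0.25, "jaw": 1.0, "tongue": 0.0}
lipsync = {"lips": 0.75, "jaw": 0.5, "tongue": 0.5}

full = {"lips": 1.0, "jaw": 1.0, "tongue": 1.0}
off = {"lips": 0.0, "jaw": 0.0, "tongue": 0.0}

# Equal weights: both sources contribute equally to every channel.
equal = blend_pose(mocap, lipsync, full, full)

# Mocap weights at zero: only the lip sync affects the controls.
lipsync_drives = blend_pose(mocap, lipsync, off, full)

# Lip-sync lips and jaw disabled: mocap drives lips and jaw,
# while the lip sync still drives the tongue.
tongue_only = blend_pose(
    mocap, lipsync,
    {"lips": 1.0, "jaw": 1.0, "tongue": 0.0},
    {"lips": 0.0, "jaw": 0.0, "tongue": 1.0},
)
```

In this model, animating a weight over time simply means calling the blend with a different weight at each frame.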

You can also decouple the corrective shapes from the lips channel as a way to automate this work (see Decoupling the Corrective Shapes from their Viseme Poses for Mocap Animation).

Once you've finalized the mocap, you can plot it and apply it to the face, which prevents any further adjustments from being made to it (see Plotting (Baking) the Mocap and Keyframe Data).

However, you can then blend the weighting of the mocap clip with the lip-sync animation clip in the mixer. See Mixing and Weighting Clips for more information.

Lip-sync animation works in conjunction with keyframe animation. You can set keys on the animation controls using animation layers (see Animation Layers), store that animation as a clip in the mixer (see Storing Animation in Action Sources), or key the animation controls directly.

To add keyframes on top of the lip-sync animation, add an animation layer and set keyframes in that layer. These are considered to be an offset from the base layer of animation (the lip-sync animation).
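As a rough mental model of "offset from the base layer", the keyed layer values can be thought of as being added to the lip-sync values frame by frame. The sketch below assumes plain addition with an optional layer weight; the function name, the weight parameter, and the sample values are hypothetical and are not taken from the software.

```python
def apply_layer(base_values, layer_offsets, layer_weight=1.0):
    """Add weighted per-frame offsets on top of a base animation curve."""
    return [base + layer_weight * offset
            for base, offset in zip(base_values, layer_offsets)]


# Made-up jaw values from the lip sync (base layer), one per frame.
lipsync_jaw = [0.0, 0.5, 1.0, 0.5]

# Made-up offsets keyed in an animation layer for the same frames.
layer_keys = [0.0, 0.25, 0.25, 0.0]

result = apply_layer(lipsync_jaw, layer_keys)
half = apply_layer(lipsync_jaw, layer_keys, layer_weight=0.5)
```

Where the offset is zero, the lip-sync animation passes through unchanged, which is why keys in a layer do not destroy the base animation.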

If you store the keyframe animation in a clip, you can blend between it and the Speech Action clip as you would normally blend between clips in the mixer (see Mixing and Weighting Clips for more information).

If you key the lip, jaw, or tongue animation controls directly, the speech operator (which drives the Speech Action clip) overrides this animation because clips in the mixer always override other animation at the same frames. However, you can set the mixer options to allow this clip to blend with the fcurves (see Mixing Fcurves with Action Clips for more information).

Except where otherwise noted, this work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported License
