The OGL Texture shader defines the image source, projection method, and other attributes of a single texture image. You can also use several OGL Texture nodes together for more complex multi-texturing effects, with each texture set on its own specified target.

| Uniform Name |

If this OpenGL texture shader is connected to a programmable shader that declares sampler types (for example, sampler2D) as uniforms, enter the name of the uniform variable as you defined it in your shader code. |
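
As a rough illustration of what the Uniform Name value has to match, the sketch below scans a fragment shader source for sampler uniform declarations. The shader text, the uniform names (baseMap, detailMap, blend), and the helper sampler_uniforms are all hypothetical and are not part of the product. They only show where the name comes from.

```python
import re

# Hypothetical GLSL fragment shader source; every name here is illustrative.
FRAGMENT_SRC = """
uniform sampler2D baseMap;
uniform sampler2D detailMap;
uniform float blend;
void main() {
    vec4 base = texture2D(baseMap, gl_TexCoord[0].st);
    vec4 detail = texture2D(detailMap, gl_TexCoord[1].st);
    gl_FragColor = mix(base, detail, blend);
}
"""

# Matches declarations such as "uniform sampler2D baseMap;".
SAMPLER_DECL = re.compile(r"\buniform\s+(sampler\w+)\s+(\w+)\s*;")


def sampler_uniforms(source):
    """Return {uniform_name: sampler_type} for sampler uniforms declared in source."""
    return {name: stype for stype, name in SAMPLER_DECL.findall(source)}


if __name__ == "__main__":
    for name, stype in sampler_uniforms(FRAGMENT_SRC).items():
        print(f"{name} ({stype})")
```

With a shader like this one, the OGL Texture node feeding the base image would use baseMap as its Uniform Name, and the node feeding the detail image would use detailMap.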

| Target |

Defines the texture stage for multi-texturing operations in a single draw pass. Each OGL Texture node needs to be bound to a unique texture target. A texture target is a layer in which a texture is set. These layers are then modulated together programmatically via a fragment shader. The number of available texture targets depends on the hardware you are using. |
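
The uniqueness rule for targets can be sketched as a small check. This assumes that target N corresponds to the OpenGL texture unit GL_TEXTURE0 + N, which is the usual OpenGL convention but is not confirmed here for this shader. The function assign_units, the node names, and the max_units parameter are hypothetical.

```python
GL_TEXTURE0 = 0x84C0  # numeric value of the OpenGL GL_TEXTURE0 enum


def assign_units(targets, max_units):
    """Map each node name to a texture unit enum.

    targets: {node_name: target_index}
    max_units: number of texture units the hardware exposes (assumed known).
    Raises ValueError if a target is out of range or used by two nodes.
    """
    owner_by_target = {}
    units = {}
    for node, target in targets.items():
        if not 0 <= target < max_units:
            raise ValueError(f"{node}: target {target} is not available on this hardware")
        if target in owner_by_target:
            raise ValueError(f"{node}: target {target} is already used by {owner_by_target[target]}")
        owner_by_target[target] = node
        units[node] = GL_TEXTURE0 + target
    return units


if __name__ == "__main__":
    print(assign_units({"base": 0, "detail": 1}, max_units=4))
```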

| Image |

Defines the image clip to use. Click Edit to open the Image Clip Property Editor, where you can modify the image clip currently in use. To retrieve a new clip, click New and indicate whether you want to select a new clip from a file or create one from a source. For more information about working with images, see Managing Image Sources & Clips [Texturing]. |

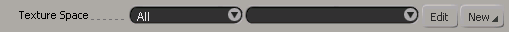

| Texture Space |

Lets you choose a texture projection. If no texture projection has been defined, you can create one by clicking New. If a texture projection is already defined, you can edit it by clicking Edit. When multiple objects with texture projections share the same material, the Texture Space widget includes an object selection list where you can specify the texture projection to use for a specific object or for all objects in the scene. Edit: Opens the Texture Projection Property Editor for the selected texture projection. New: Specifies the new texture projection to create. You can choose from the following:

|

| Border |

Sets the color of the texture image's border. The border is used for clamping: you may want to clamp the texture to a certain portion of the object without its colors bleeding onto the rest of the object. When you clamp the texture using its border, portions of the object not covered by the texture use the border color. |
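
The effect of clamping to the border can be sketched with a simplified texel lookup. This is an illustration only: it uses nearest-texel sampling and ignores filtering, and the function name sample_clamp_to_border and the tiny 2x2 texture are hypothetical.

```python
def sample_clamp_to_border(texels, u, v, border):
    """Nearest-texel lookup with border clamping.

    texels: rows of RGBA tuples, indexed as texels[row][column].
    u, v: texture coordinates; [0, 1) is the area covered by the image.
    border: RGBA tuple returned for coordinates outside the image.
    """
    if not (0.0 <= u < 1.0 and 0.0 <= v < 1.0):
        return border  # not covered by the texture: use the border color
    height = len(texels)
    width = len(texels[0])
    return texels[int(v * height)][int(u * width)]


if __name__ == "__main__":
    red, green = (1.0, 0.0, 0.0, 1.0), (0.0, 1.0, 0.0, 1.0)
    blue, white = (0.0, 0.0, 1.0, 1.0), (1.0, 1.0, 1.0, 1.0)
    texture = [[red, green], [blue, white]]
    black = (0.0, 0.0, 0.0, 1.0)
    print(sample_clamp_to_border(texture, 0.25, 0.25, black))  # inside the image
    print(sample_clamp_to_border(texture, 1.5, 0.5, black))    # outside the image
```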

| Modulation |

Determines how the diffuse lighting attribute affects the texture color. The default texture environment is Modulate (GL_MODULATE), which multiplies the texture color by the primitive (or lighting) color. The available texture modulation modes are: (Where f = fragment, t = texture, and c = GL_TEXTURE_ENV_COLOR) The order in which the object is shaded and modulated is as follows:

|
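
For the default Modulate mode, the multiplication described above can be written out per color component. This is a minimal sketch using the f (fragment) and t (texture) notation from the description. The function name modulate is hypothetical, and the other modulation modes are not covered.

```python
def modulate(fragment, texture):
    """GL_MODULATE-style combine: multiply each fragment component by the texture component."""
    return tuple(f * t for f, t in zip(fragment, texture))


if __name__ == "__main__":
    lit_gray = (0.5, 0.5, 0.5, 1.0)  # f: primitive (lighting) color
    orange = (1.0, 0.5, 0.0, 1.0)    # t: texture color
    print(modulate(lit_gray, orange))
```

Because every component is multiplied, a darker lighting color darkens the texture proportionally, and a white fragment color leaves the texture color unchanged.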

| Compression |

Toggles texture compression on and off. If texture quality is less important (for example, the texture is far away or already contains a lot of noise), you can activate Compression to save texture memory. This converts your texture to S3TC format, which reduces its size in video memory by a ratio of about 4:1. |
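
The approximate 4:1 figure can be checked with simple arithmetic. The sketch below assumes an uncompressed 32-bit RGBA image and an S3TC variant that stores each 4x4 pixel block in 16 bytes (as DXT3 and DXT5 do). Which variant the shader actually uses is not stated here, so treat these numbers as an estimate. The function names are hypothetical.

```python
def uncompressed_bytes(width, height, bytes_per_pixel=4):
    """Size of an uncompressed image; 4 bytes per pixel corresponds to 8-bit RGBA."""
    return width * height * bytes_per_pixel


def s3tc_bytes(width, height, block_bytes=16):
    """Size of an S3TC image: one fixed-size block per 4x4 pixel tile.

    block_bytes=16 is assumed here (DXT3/DXT5); DXT1 uses 8 bytes per block.
    """
    blocks_x = (width + 3) // 4  # round up to whole blocks
    blocks_y = (height + 3) // 4
    return blocks_x * blocks_y * block_bytes


if __name__ == "__main__":
    raw = uncompressed_bytes(1024, 1024)
    packed = s3tc_bytes(1024, 1024)
    print(raw, packed, raw / packed)
```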

| Format |

Specifies the image format. Typically, RGB/RGBA is used, although you can specify DSDT (red/green) or DSDT (red/blue) where necessary. For example, you may need to convert a texture to DSDT format in order to use bump reflection instructions on NV2x-based video cards. |