This section discusses some basic concepts that are important to understand throughout the texturing process.

Softimage allows you to use two different types of textures: image textures, which are separate image files applied to an object's surface, and procedural textures, which are calculated mathematically.

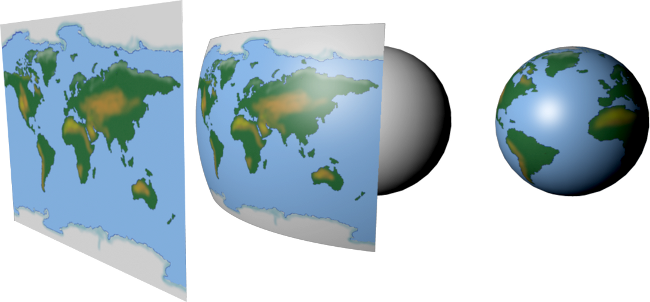

Image textures are 2D images that can be wrapped around an object's surface, much like a sheet of rubber that's wrapped around an object. To use an image texture, you start with any type of picture file (PIC, GIF, TIFF, PSD, DDS, etc.) such as a photo or a file made with a paint program.

Procedural textures are generated mathematically, each according to a particular algorithm. Typically, they are used for gradients, repeating patterns such as checkerboards, and fractals that mimic natural patterns such as wood, clouds, or marble.
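As a rough illustration of what "calculated mathematically" means, the following Python sketch computes a checkerboard and a linear gradient from UV coordinates alone, with no image file involved. The function names and the `tiles` parameter are hypothetical and are not part of Softimage's shader library.

```python
import math

def checker(u, v, tiles=8):
    """Return 1.0 or 0.0 for a checkerboard with `tiles` squares per UV axis."""
    # Find which square the point falls into along each axis.
    col = math.floor(u * tiles)
    row = math.floor(v * tiles)
    # Squares alternate whenever the column or row index changes parity.
    return 1.0 if (col + row) % 2 == 0 else 0.0

def gradient(u, v, start=0.0, end=1.0):
    """Return a value that ramps linearly from `start` to `end` along U."""
    t = min(max(u, 0.0), 1.0)  # clamp U to the 0..1 range
    return start + (end - start) * t
```

Because the pattern is a function rather than a stored picture, it can be evaluated at any resolution without pixelation.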

Softimage's shader library contains both 2D and 3D procedural textures. 2D procedural textures are calculated on the object's surface — according to their texture projections — while 3D procedural textures are calculated through the object's volume. In other words, unlike 2D textures, 3D textures are projected "into" objects rather than onto them. This means they can be used to represent substances having internal structure, like the rings and knots of wood.
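The difference between 2D and 3D procedural textures can be sketched as a difference in inputs: a 2D texture takes a (u, v) pair, while a 3D texture takes a position in space. The hypothetical `wood_rings` function below is a minimal sketch of a solid texture, assuming the trunk runs along the Y axis; it is not Softimage's wood shader.

```python
import math

def wood_rings(x, y, z, rings_per_unit=4.0):
    """Return a 0..1 ring intensity for a point inside the object's volume."""
    # Distance from the trunk axis (assumed here to be the Y axis).
    radius = math.hypot(x, z)
    # A cosine of the radius gives concentric light and dark bands.
    return 0.5 + 0.5 * math.cos(2.0 * math.pi * radius * rings_per_unit)
```

Any cut through the object reveals a consistent ring pattern, because the value depends on where the point sits in the volume rather than on a surface projection.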

In Softimage, a texture is more than just an image or a shader. Applying a texture to an object creates a variety of elements that define the texture in the scene and control the way it appears on the object to which it is applied.

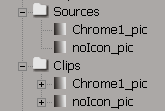

When you apply an image file as a texture, an image source and an instance of the image — called an image clip — are created. For more information on image sources and clips, see Managing Image Sources & Clips [Data Exchange].

Several image clips can be created from the same source. You can then edit each one slightly, according to your needs. For example, if you are using the same picture file (source) for both your surface texture and your bump map, you can apply a clip effect such as a slight blur to the bump clip without affecting the picture being used as a surface texture.
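The source and clip relationship can be pictured with a small data model. This is only a conceptual sketch: the class names, the `blur_radius` field, and the file name `bricks.pic` are hypothetical and do not reflect how Softimage stores sources and clips internally.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ImageSource:
    """Points to the picture file on disk; never modified by clip effects."""
    path: str

@dataclass
class ImageClip:
    """An instance of a source, carrying its own effect settings."""
    source: ImageSource
    blur_radius: float = 0.0  # hypothetical clip effect

source = ImageSource("bricks.pic")              # one picture file
color_clip = ImageClip(source)                  # used as the surface texture
bump_clip = ImageClip(source, blur_radius=1.5)  # blurred copy for the bump map
```

Both clips refer to the same source, yet the blur on `bump_clip` leaves `color_clip` untouched.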

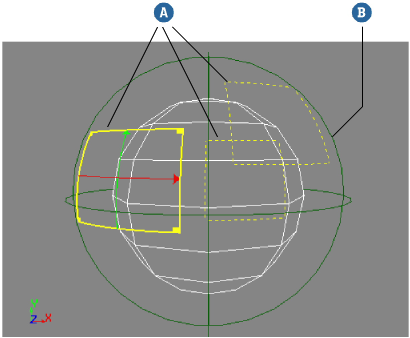

Each texture must be associated with a texture projection. The projection controls how the texture is applied across the surface of an object.

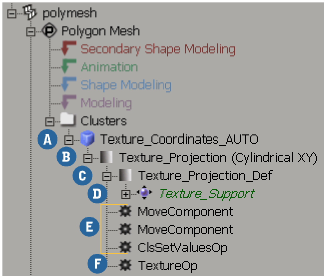

Some types of texture projections also have a texture support. The texture support is a scene object that you can manipulate to modify the projection, for example, to scale and position a label on a bottle. Texture support objects are displayed as a dark green wireframe in the 3D views.

The texturing process works much like a slide projector.

Now imagine a slide projector that can project multiple slides simultaneously and precisely control where each one appears on the screen. That's how texturing works in Softimage.

Each object can have multiple projections and supports. Each projection can be associated with only one object and one support, but a single support can be associated with multiple projections on multiple objects.

For example, if you have a model of a letter envelope, you can apply a single planar support to it and use a texture projection for the stamp, another for the address, and yet another for the return address.
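The envelope example can be sketched as one shared support with several projections, each placing its own texture in a different region. Everything here is an assumption made for illustration: the class names, the offset and scale fields, and the simplification of a planar support to an axis-aligned rectangle.

```python
from dataclasses import dataclass

@dataclass
class PlanarSupport:
    """A rectangle in the XY plane that projections are measured against."""
    origin_x: float
    origin_y: float
    width: float
    height: float

    def base_uv(self, x, y):
        # Map a point on the plane to 0..1 coordinates across the support.
        return ((x - self.origin_x) / self.width,
                (y - self.origin_y) / self.height)

@dataclass
class Projection:
    """One projection: a single support plus its own UV placement."""
    name: str
    support: PlanarSupport
    offset_u: float = 0.0
    offset_v: float = 0.0
    scale_u: float = 1.0
    scale_v: float = 1.0

    def uv(self, x, y):
        # Start from the support's coordinates, then apply this
        # projection's own placement so each texture lands in its own area.
        u, v = self.support.base_uv(x, y)
        return ((u - self.offset_u) / self.scale_u,
                (v - self.offset_v) / self.scale_v)

envelope_support = PlanarSupport(0.0, 0.0, 10.0, 5.0)
stamp = Projection("stamp", envelope_support,
                   offset_u=0.8, offset_v=0.7, scale_u=0.2, scale_v=0.3)
address = Projection("address", envelope_support,
                     offset_u=0.3, offset_v=0.2, scale_u=0.4, scale_v=0.3)
```

Moving or scaling `envelope_support` would shift the stamp and the address together, while each projection's own offset and scale still position its texture independently.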

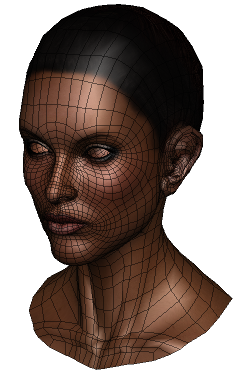

Applying a texture projection to an object creates a set of texture coordinates — often called "UV coordinates" or simply "UVs" — that control which part of the texture corresponds to which part of an object's surface. Each UV pair in a set of coordinates associates a location on an object, called a "sample point", to a location on an image. The texture values for other locations on the surface of the object are interpolated from the surrounding samples.
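To make the interpolation step concrete, here is a minimal sketch for a point inside a triangle whose three corners carry UV samples. It assumes barycentric weights, which is one common interpolation scheme; the source does not state which scheme Softimage uses, and the function name is hypothetical.

```python
def interpolate_uv(weights, corner_uvs):
    """Blend the corner UVs using barycentric weights that sum to 1."""
    u = sum(w * uv[0] for w, uv in zip(weights, corner_uvs))
    v = sum(w * uv[1] for w, uv in zip(weights, corner_uvs))
    return (u, v)

# UV samples stored at the three corners of a triangle.
corners = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]

# A point at the triangle's center weights every corner equally.
center_uv = interpolate_uv((1 / 3, 1 / 3, 1 / 3), corners)
```

A point that coincides with a corner has a weight of 1 for that corner, so it receives exactly that sample's UV pair.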

On a polygon object, the sample points are polygon nodes, or polynodes. There is one polynode for each polygon corner.
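Because a polynode belongs to a polygon corner rather than to a vertex, a mesh usually has more polynodes than vertices. The sketch below counts both for a cube described as six quads; the vertex indexing and helper names are hypothetical.

```python
# Each polygon is listed by the indices of the vertices at its corners.
cube_polygons = [
    (0, 1, 2, 3), (4, 5, 6, 7),  # bottom, top
    (0, 1, 5, 4), (1, 2, 6, 5),  # sides
    (2, 3, 7, 6), (3, 0, 4, 7),
]

def count_polynodes(polygons):
    """One polynode per polygon corner."""
    return sum(len(polygon) for polygon in polygons)

def count_vertices(polygons):
    """Vertices are shared, so count each index only once."""
    return len({index for polygon in polygons for index in polygon})
```

For this cube the counts are 24 polynodes and 8 vertices, so a vertex shared by three faces can carry a different UV pair on each face.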

On NURBS objects, the sample points are generated based on a regular sampling of the object's surface.

You can view and adjust UV coordinates using the texture editor. See Working with UVs in the Texture Editor.

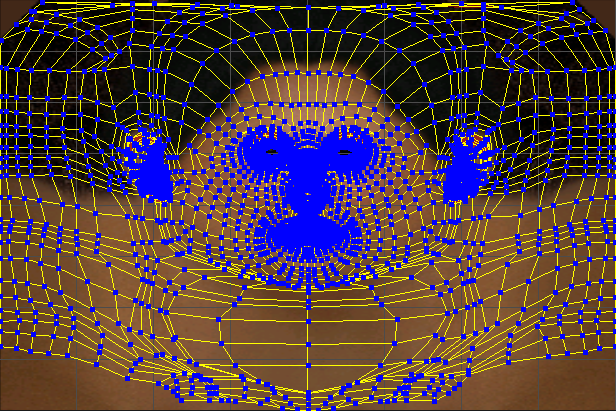

All of the properties associated with a texture projection can be found in an explorer.

Illumination shaders and textures are usually combined to create an object's look. The illumination shader defines the object's surface characteristics such as base color, transparency, refraction, reflectivity, and so on.

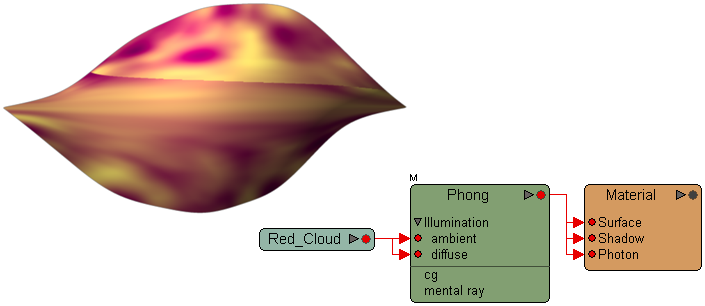

A texture, on the other hand, applies either an image or a procedural texture onto the surface. The texture doesn't "cover" the surface shader; rather, it drives specific shader parameters.

In the following example, the texture is connected to the surface shader's ambient and diffuse parameters only. The Phong shader takes the texture's values as the input diffuse and ambient colors, calculates the lighting in the scene based on its specular and other settings, and outputs the final surface color.

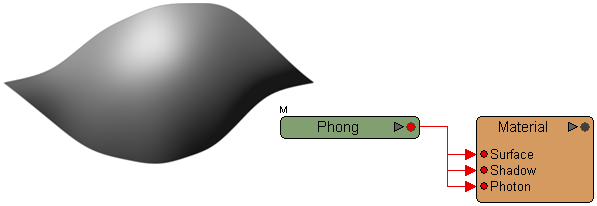

The material node is connected to a Phong surface shader. By default, the surface shader is connected to the surface, shadow, and photon inputs.

A texture (red cloud) is connected to the Ambient and Diffuse parameters of the surface shader. The surface's Ambient and Diffuse values are overridden and output to the material node's surface input, which makes the object display the texture.
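The idea that a texture drives shader parameters instead of covering the shader can be sketched with a simplified Phong calculation. This is a textbook-style approximation with a single light and hypothetical function names, assuming all direction vectors are normalized; it is not the code of Softimage's Phong shader.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def phong(normal, to_light, to_eye, ambient, diffuse, specular,
          shininess=50.0, ambient_light=0.1):
    """Return an RGB color from ambient, diffuse, and specular inputs."""
    n_dot_l = max(0.0, dot(normal, to_light))
    highlight = 0.0
    if n_dot_l > 0.0:
        # Mirror the light direction about the normal for the highlight.
        reflected = tuple(2.0 * n_dot_l * n - l
                          for n, l in zip(normal, to_light))
        highlight = max(0.0, dot(reflected, to_eye)) ** shininess
    return tuple(
        min(1.0, a * ambient_light + d * n_dot_l + s * highlight)
        for a, d, s in zip(ambient, diffuse, specular)
    )

def shade_textured(texture_color, normal, to_light, to_eye, specular):
    """Feed the texture into the ambient and diffuse inputs only."""
    return phong(normal, to_light, to_eye,
                 ambient=texture_color, diffuse=texture_color,
                 specular=specular)
```

The specular color is passed through unchanged, which mirrors the example above: the texture replaces only the ambient and diffuse values, and the shader still computes the lighting.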

Except where otherwise noted, this work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported License
