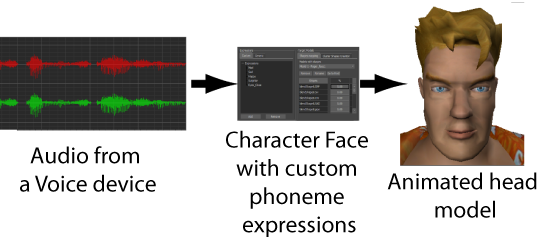

Using a Character Face and the Voice device, you can set up a character to “talk” with an audio file or live audio input as its voice. Through the Voice device, the phonemes in the audio input drive the expressions on the character’s face.

Audio-driven facial animation workflow

To drive facial expressions using audio data:

Your head model should include a shape for each phoneme the character needs in order to convey the audio input, and each shape should correspond to a sound parameter of the Voice device. See also Phoneme shapes.
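The correspondence between shapes and sound parameters can be checked before you start mapping. The following is a minimal sketch in plain Python, not MotionBuilder API code. It assumes you have written out the sound parameter names and the head model's shape names as two lists. The function name `find_unmatched`, the sample phoneme names, and the idea of matching by identical name are all assumptions made for illustration.

```python
# Hypothetical helper: compares two plain lists of names.
# Nothing here calls MotionBuilder; the names are illustrative only.

def find_unmatched(sound_parameters, head_shapes):
    """Return (parameters with no shape, shapes with no parameter), each sorted."""
    params = set(sound_parameters)
    shapes = set(head_shapes)
    return sorted(params - shapes), sorted(shapes - params)


# Example data (assumed, not taken from any real Voice device setup).
sound_parameters = ["AA", "EE", "OO", "MM"]
head_shapes = ["AA", "EE", "MM", "Blink"]

missing_shapes, unused_shapes = find_unmatched(sound_parameters, head_shapes)
print("Sound parameters without a shape:", missing_shapes)
print("Shapes without a sound parameter:", unused_shapes)
```

A sound parameter with no matching shape has nothing to drive on the face. A shape with no sound parameter, such as a blink, is not necessarily a problem, because it may be animated by other means.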

When you add sound parameters (phoneme sounds) to the Voice device, custom expressions for them automatically appear in the Expressions pane.

Except where otherwise noted, this work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported License
