Deprecated

After the declaration is known, the shader must be implemented. This example uses NVIDIA's Cg language, version 1.2. Only a rough outline of a Phong shading material is given; the light shaders are omitted to keep this short.

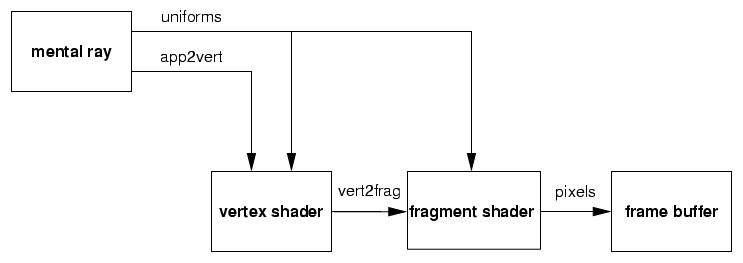

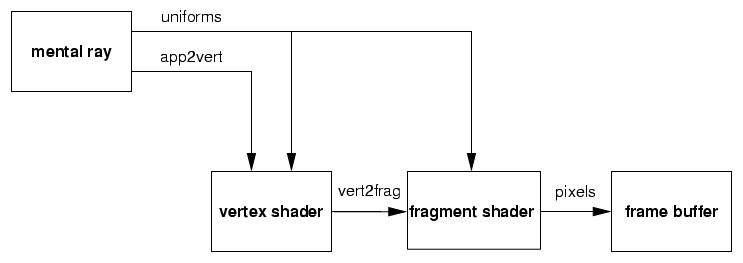

The hardware performs shading in two stages: the vertex shader is run per vertex, and the fragment shader is run per shaded point on the triangle defined by three vertices that have passed through the vertex shader. Here is an overview of the data flow in the example:

mental ray installs the shaders on the graphics board and provides two sets of information to them: per-vertex data and shader parameters. The per-vertex data arrives in app2vert, a structure that contains information about the current vertex. It is roughly equivalent to the miState structure passed to software shaders. mental ray passes it in standard registers defined by the hardware, such as POSITION. (By convention, NVIDIA uses all-capitals names for these registers.) In Cg syntax, this structure is defined as:

struct app2vert

{

float4 position : POSITION;

float3 normal : NORMAL;

float2 texCoord0 : TEXCOORD0;

float2 texCoord1 : TEXCOORD1;

float3 derivU : ATTR1;

float3 derivV : ATTR2;

};

The colon notation describes how the fields (left) are mapped to the hardware registers (right). The vertex shader performs some computations and creates a new structure called vert2frag. Since rendering is done per triangle, the hardware interpolates the vert2frag structures of the triangle's three vertices, and the fragment shader receives the interpolated structure each time it is called for a pixel in that triangle. In this example, the fields of that structure are declared in Cg as:

struct vert2frag

{

float4 hPosition : POSITION;

float2 texCoord0 : TEXCOORD0;

float2 texCoord1 : TEXCOORD1;

float3 cPosition : TEXCOORD2; // camera-space position

float3 cNormal : TEXCOORD3; // camera-space normal

float3 position : TEXCOORD4; // object-space position

float3 normal : TEXCOORD5; // object-space normal

float3 derivU : TEXCOORD6; // U derivative

float3 derivV : TEXCOORD7; // V derivative

float3 screen : WPOS; // screen-space position

};

This structure contains the projected position, texture coordinates, normals, and derivatives. Again, the named parameters are mapped to a fixed set of all-uppercase hardware registers, such as TEXCOORD0.
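As a rough illustration of how a fragment program consumes the interpolated structure, the following minimal sketch displays the camera-space normal as a color. It is not part of the mental ray shader set: the trimmed structure vert2frag_min and the debug program itself are hypothetical, and only the cNormal field and its TEXCOORD3 binding are taken from the declaration above.

```cg
// Trimmed, hypothetical copy of vert2frag: only the field this sketch reads.
struct vert2frag_min
{
    float3 cNormal : TEXCOORD3; // camera-space normal, interpolated per fragment
};

// Hypothetical debug fragment program (not a mental ray shader):
// visualizes the interpolated camera-space normal.
float4 main(vert2frag_min IN) : COLOR
{
    // Linear interpolation across the triangle does not preserve
    // unit length, so the normal is renormalized per fragment.
    float3 n = normalize(IN.cNormal);

    // Remap each component from [-1, 1] to the displayable range [0, 1].
    return float4(0.5 * n + 0.5, 1.0);
}
```

The renormalization step is the reason the example fragment shader later in this section can treat p.cNormal as a direction even though the vertex shader only normalized it per vertex.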

Finally, shaders also receive shader parameters. The set of parameters must be declared to mental ray in a shader declaration. Unlike the previous two structure definitions, which are read by the Cg compiler, the parameter declaration uses .mi syntax and is read by mental ray:

declare shader

color "mib_illum_phong" (

color "ambience",

color "ambient",

color "diffuse",

color "specular",

scalar "exponent",

array light "lights"

)

end declare

This declaration is for the shader named mib_illum_phong, which has six parameters. mental ray uses this name to locate the Cg shader to load (this will be explained in more detail later). There is no equivalent Cg declaration for the parameter list; instead, Cg expects parameters to be declared with the keyword uniform embedded in the Cg function implementation.
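The following sketch shows what uniform parameters embedded in a Cg entry point can look like for the five non-light parameters of the declaration above. It is an assumption-laden illustration only: the plain float4 and float parameter types, the trimmed vert2frag_min structure, and the ambient-only body are hypothetical, and the lights array is left out entirely.

```cg
// Trimmed, hypothetical copy of vert2frag for a self-contained sketch.
struct vert2frag_min
{
    float3 cNormal : TEXCOORD3; // camera-space normal (unused here)
};

// Hypothetical entry point: each .mi parameter reappears as a uniform
// argument with the same name. Types are assumed (color -> float4,
// scalar -> float); the "lights" array is omitted from this sketch.
float4 main(vert2frag_min IN,
            uniform float4 ambience,
            uniform float4 ambient,
            uniform float4 diffuse,
            uniform float4 specular,
            uniform float  exponent) : COLOR
{
    // Ambient term only; without lights, diffuse, specular, and
    // exponent are declared but contribute nothing.
    return ambience * ambient;
}
```

The uniform qualifier marks values that stay constant for all fragments of a draw call, in contrast to the varying fields of the interpolated input structure.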

Here is the implementation of the example main vertex shader. The same vertex shader can often be combined with many different fragment shaders to implement a variety of effects, so it is stored in a separate file:

vert2frag main(

app2vert IN,

uniform cgglMatrix mx)

{

vert2frag OUT;

#ifdef PROFILE_ARBVP1

mx.ModelViewProj = glstate.matrix.mvp;

mx.ModelView = glstate.matrix.modelview[0];

mx.ModelViewIT = glstate.matrix.invtrans.modelview[0];

#endif

// copy the object space information

OUT.position = IN.position.xyz;

OUT.normal = IN.normal.xyz;

// set the homogeneous clip-space (projected) position of the vertex

// and compute the camera-space position for lighting

OUT.hPosition = mul(mx.ModelViewProj, IN.position);

OUT.cPosition = mul(mx.ModelView, IN.position).xyz;

// transform the derivative vectors to camera space

float3x3 rot = (float3x3)mx.ModelView;

OUT.derivU = mul(rot, IN.derivU).xyz;

OUT.derivV = mul(rot, IN.derivV).xyz;

// transform normal from model space to camera space

OUT.cNormal = normalize(mul((float3x3)mx.ModelViewIT, IN.normal.xyz));

// pass the texture coordinates through unchanged

OUT.texCoord0 = IN.texCoord0;

OUT.texCoord1 = IN.texCoord1;

return OUT;

}

Each shader is stored in a separate Cg file, and the shader's entry point must be named main. Note that the shader is declared to return a vert2frag structure, which is the data later passed to the fragment shader after interpolation.

This is the corresponding fragment shader:

struct mib_illum_phong : miiColor

{

// shader parameter definition, must agree with .mi declaration

miiColor ambience, ambient, diffuse, specular;

miiScalar exponent;

miiLight lights[];

float4 eval(vert2frag p) {

float4 result;

float4 lambience = ambience.eval(p);

float4 lambient = ambient.eval(p);

float4 ldiffuse = diffuse.eval(p);

float4 lspecular = specular.eval(p);

float lexponent = exponent.eval(p);

float3 vdir = normalize(p.cPosition);

result = lambience * lambient;

// Material calculation for each light

for (int i=0; i < lights.length; i++) {

misLightOut light = lights[i].eval(p);

// Lambert's cosine law

result += light.dot_nl * ldiffuse * light.color;

// Phong's cosine power

float s = mi_phong_specular(lexponent, light.dir,

-vdir, p.cNormal);

result += s * lspecular * light.color;

}

return result;

}

};

To keep this example simple, the light shaders have been omitted, and the Cg code of the mi_phong_specular function is not shown. Note the parameter members of this structure: they correspond exactly to the .mi declaration shown above, with mii-prefixed Cg types (miiColor, miiScalar, miiLight) standing in for the .mi types. Since NVIDIA hardware registers store floating-point values, any integer parameter, such as a mode, is stored as a floating-point number. Also note that the shader returns a color, indicated by the miiColor type after the colon.
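For orientation, a specular helper with the same argument order as the call in the fragment shader could look roughly like the sketch below. This is not mental ray's implementation: the name phong_specular_sketch, the trimmed vert2frag_min structure, the lightDir uniform, and the assumption that the light direction is a unit vector pointing from the surface toward the light are all hypothetical. Only the standard Cg library functions normalize, reflect, dot, max, and pow are used.

```cg
// Trimmed, hypothetical copy of vert2frag for a self-contained sketch.
struct vert2frag_min
{
    float3 cPosition : TEXCOORD2; // camera-space position
    float3 cNormal   : TEXCOORD3; // camera-space normal
};

// Hypothetical stand-in for the omitted specular helper.
// Assumes ldir points from the surface toward the light and
// vdir points from the surface toward the viewer (both unit length).
float phong_specular_sketch(float exponent, float3 ldir,
                            float3 vdir, float3 normal)
{
    float3 n = normalize(normal);

    // reflect() expects an incident vector pointing toward the
    // surface, so the light direction is negated before mirroring.
    float3 r = reflect(-ldir, n);

    // Phong's cosine power, clamped so that back-facing
    // reflections contribute nothing.
    float c = max(dot(r, vdir), 0.0);
    return c > 0.0 ? pow(c, exponent) : 0.0;
}

// Hypothetical driver with a single directional light passed as a uniform.
float4 main(vert2frag_min IN,
            uniform float3 lightDir,
            uniform float4 specular,
            uniform float  exponent) : COLOR
{
    // In camera space the eye sits at the origin, so the normalized
    // position is the direction from the eye to the shaded point.
    float3 vdir = normalize(IN.cPosition);
    float s = phong_specular_sketch(exponent, normalize(lightDir),
                                    -vdir, IN.cNormal);
    return s * specular;
}
```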

The Cg 1.2 language greatly simplifies inter-shader communication, which is important for automatic translation of mental ray's Phenomena to Cg shader graphs. Sub-shaders for multiple light sources or texture generation no longer need to be referenced explicitly; this is handled automatically. The key function is the eval member function, which plays the same role as the mi_eval functions in mental ray software shaders.
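The mechanism behind eval is the interface feature introduced in Cg 1.2. The sketch below illustrates the idea with hypothetical names throughout: miiColor_sketch, constant_color, tint, and vert2frag_min are invented for this example and are not the definitions shipped with mental ray. It assumes, as the fragment shader above does, that a structure may hold members of an interface type.

```cg
// Trimmed, hypothetical copy of vert2frag for a self-contained sketch.
struct vert2frag_min
{
    float2 texCoord0 : TEXCOORD0;
};

// Hypothetical interface: anything that can be evaluated to a color.
interface miiColor_sketch
{
    float4 eval(vert2frag_min p);
};

// Leaf of a shader graph: returns a fixed value.
struct constant_color : miiColor_sketch
{
    float4 value;
    float4 eval(vert2frag_min p) { return value; }
};

// Inner node of a shader graph: evaluates its sub-shader through the
// interface without knowing which concrete structure is attached.
struct tint : miiColor_sketch
{
    miiColor_sketch input;
    float4 factor;
    float4 eval(vert2frag_min p) { return factor * input.eval(p); }
};

// Hypothetical entry point: the application binds a concrete
// structure to the interface parameter before compilation.
float4 main(vert2frag_min IN, uniform miiColor_sketch shader) : COLOR
{
    return shader.eval(IN);
}
```

Because the concrete type behind each interface parameter is resolved when the program is compiled, a graph of such structures can be flattened into a single hardware program, which is what makes this approach suitable for translated Phenomena.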

Shader writing for other hardware boards, such as ATI boards, is very similar. The Cg 1.2 compiler can be used with its ARB profile, which generates code that conforms to the OpenGL Architecture Review Board (ARB) baseline definition. (NVIDIA's Cg compiler is not expected to cater to ATI's special extensions.) However, all the components are the same. The forthcoming OpenGL 2 standard will define a shader language equivalent to Cg to avoid having to use the less intuitive assembly language. At this time (March 2004), this new language does not accept interface constructs required for mental ray Phenomena.

Copyright © 1986-2009 by mental images GmbH