This list is a compilation of questions received from programmers using the Maya API. A set of categories has been defined (see the list below), and the questions have been organized into these categories to make the presentation more logical.

The current list of Categories is:

How do I know what units an API method returns?

Unless otherwise specified, all API methods use Maya’s internal units: centimeters and radians.
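When a value comes from the UI in other units, it has to be converted before it is handed to the API. The sketch below shows the arithmetic in plain C++. The helper names are hypothetical (inside Maya, the MAngle class handles this for angles), and the sketch assumes the UI is set to degrees and inches.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helpers (not Maya API): convert common UI units into
// Maya's internal units, centimeters and radians.
constexpr double kPi = 3.14159265358979323846;

double degreesToRadians(double degrees) { return degrees * kPi / 180.0; }
double radiansToDegrees(double radians) { return radians * 180.0 / kPi; }

// One inch is exactly 2.54 cm.
double inchesToCentimeters(double inches) { return inches * 2.54; }
```

For example, 90 degrees entered in the UI corresponds to roughly 1.5708 radians internally, and 10 inches to 25.4 cm.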

Is there a stand-alone mode similar to OpenModel? Or, would a stand-alone be similar to Avid® Softimage® where the basic software package (and license) is required?

Yes. The “Setting up your plug-in build environment” chapter in the API guide and the documentation on the MLibrary class describe how to set this up and use it. There are also several example stand-alone applications, described in the “Example Plug-ins” chapter of the API guide.

While OpenModel presents the same API interface as OpenAlias, many of the function calls (even the non-UI ones) work differently or not at all in OpenModel. The lack of render control is a prime example, where some parts of the same function set work and others don’t. This is extremely frustrating, especially since the renderer is a very likely candidate for being done in batch. I cannot tell if the same disparities will show up with Maya in batch and interactive modes, but I will be quite disappointed if they do.

This problem should not exist in the Maya API. Of course, UI calls will not work when run in library mode, but all other calls should behave identically.

The use of angular units is inconsistent. In Maya’s attribute window, transform rotations are given in degrees. However, in the API, attaching to the rotation attributes requires writing out radians. When writing out values for keyframes, we want to be consistent with what we see in the UI.

When dealing with the UI, the API uses the MAngle class. This class contains a method “uiUnit” that returns the unit the user has chosen in the UI. This value is a user preference that can be changed at any time. The MAngle class defaults to radians in all cases so that plug-in code does not have to adjust to the UI preference currently in effect. You can adjust to the units that have been set by the UI user with the following code:

```cpp
// Make MAngle's internal unit match the unit currently chosen in the UI.
MAngle foo;
foo.setInternalUnit( foo.uiUnit() );
```

After this, all new MAngle instances will operate in the same units as the UI, until, of course, the UI user changes his/her preferences again.

Additionally, the rotation functions in the transform class deal in doubles that represent radians for efficiency reasons, as that is what the underlying implementation demands. If you are acquiring angular data from the UI, you should access it via the asMAngle method in MArgList and use the asRadians method to extract the value required by the transform class.

We want to modify the blind_data example to add a multidimensional array as a dynamic attribute, but don’t know which MFnAttribute class is the most appropriate for this operation.

You can make an attribute of any type and turn it into an array by calling the setArray method of the MFnAttribute class. If you need a multidimensional array, you will have to build it on top of this one-dimensional array using some form of index conversion.
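As a minimal sketch of such an index conversion, assuming a three-dimensional array stored in row-major order in a single array attribute made with setArray, the hypothetical helpers below map an (x, y, z) index to the logical element index and back. They are plain C++, not part of the Maya API.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helpers (not Maya API) for storing a dimX x dimY x dimZ array
// in one flat array attribute, in row-major order (z varies fastest).
struct Index3 {
    std::size_t x, y, z;
};

// (x, y, z) -> logical index into the flat array.
std::size_t flatten(const Index3& i, std::size_t dimY, std::size_t dimZ) {
    assert(i.y < dimY && i.z < dimZ);
    return (i.x * dimY + i.y) * dimZ + i.z;
}

// Logical index -> (x, y, z); the inverse of flatten().
Index3 unflatten(std::size_t flat, std::size_t dimY, std::size_t dimZ) {
    Index3 i;
    i.z = flat % dimZ;
    i.y = (flat / dimZ) % dimY;
    i.x = flat / (dimY * dimZ);
    return i;
}
```

For a 3 x 4 x 5 array, element (1, 2, 3) lives at logical index 33 of the array attribute.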

If this is not sufficient, another option is to derive a whole new data type from MPxData that can directly store a multidimensional array. This is more work, however: you will need to implement the readASCII, writeASCII, readBinary, and writeBinary virtual methods inherited from MPxData so that the new data type saves and restores correctly. Three example plug-ins demonstrate how this is accomplished: blindShortDataCmd.cc, blindDoubleDataCmd.cc and blindComplexDataCmd.cc.

How can we get notified when an attribute of a node changes?

There is a hierarchy of classes rooted at the MMessage class in the API that provide a way for you to register callback functions that will be invoked when a particular Maya event occurs. There is a large set of messages that, among other things, allow you to find out when an attribute changes.

How do we bake data via the API?

The “bakeResults” command can be used to bake animation data. All expressions, motionPaths, animCurves, and so on, are replaced in the dependency graph with a single animCurve that will produce the same motion. You can access this functionality via the “MGlobal::executeCommand” method.

Similarly, the MEL command delete, used with the -ch flag, removes construction history for an object. From the API, you will also have to access this via the MGlobal::executeCommand method. This functionality is also available from the UI through the Edit > Delete > Construction History menu item.

The MFnNurbsSurface::cv() method returns an MObject, but it is unclear what function set can be used to access the returned information. A function set that operates on a class that represents CVs or that operates on MPoints does not exist.

Use the MItSurfaceCV iterator. While this might be a little counter-intuitive, the MObject returned by the cv method is actually a component structure that can contain multiple CVs of an object, so the CV iterator is required to unpack it and get at the CV data.

When a group node is selected, all objects in the group are highlighted as if they are selected, but the global selection list only has the group node in it. Is this the way it’s supposed to be? It seems to me that everything in the group would be in the list, since they are all selected. (I’m constructing an MItSelectionList from the global active selection list and using MFn::kInvalid as the filter...)

This is indeed the way it works. This is not an issue with the API or MItSelectionList, but rather the way Maya works. You can see identical behavior by starting the Hypergraph (Window > Hypergraph: Hierarchy) and then:

Notice in the Hypergraph that only the group transform, “group1”, is “selected”, even though all the primitives in the group are “highlighted”. As far as Maya is concerned, only the one node is selected, and so that single node is the only one returned by MItSelectionList. You certainly can select the individual primitives in the Hypergraph, or by name using MEL, but the UI only selects the group transform.

However, you can get the list of objects that are “highlighted” when the transform is selected via the API call MGlobal::getHiliteList.

This question concerns simple API array structures, like the MPointArray. Is the data stored in an MPointArray contiguous, or is it stored as a linked list?

In other words, does the append() method just add another element on (as in a linked list) or is it doing the equivalent of a realloc() function (allocates a new contiguous block of data plus one element, and then copies the old data over)?

It is not a linked list; however, neither does it do a realloc on each append (or insert). Instead, it manages a logical/physical space model, and expands the physical space by a user-configurable number of elements when more is needed.

All the “*Array” classes should contain the methods “sizeIncrement” and “setSizeIncrement”. The former tells you by how many elements the array will grow when it needs to, and the latter allows you to change that value.

As of Maya 2.0, the constructors for all the array classes accept an initial size parameter. So if you know the size of your array in advance, you can completely avoid any growing/copying overhead in the array.
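The growth policy described above can be modelled in a few lines. The class below is hypothetical and only illustrates the logical/physical model: its sizeIncrement and setSizeIncrement methods echo the names mentioned above, but this is not Maya’s implementation, and the default increment of 8 is an arbitrary assumption.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <utility>

// Hypothetical illustration (not Maya's implementation) of an array that keeps
// a logical length separate from its physical capacity.
class GrowableDoubleArray {
public:
    // A non-zero initialSize sets the logical length up front, so callers
    // who know the final size can assign elements without any reallocation.
    explicit GrowableDoubleArray(std::size_t initialSize = 0)
        : length_(initialSize),
          capacity_(initialSize),
          data_(new double[initialSize]()) {}

    std::size_t length() const { return length_; }
    std::size_t capacity() const { return capacity_; }

    std::size_t sizeIncrement() const { return increment_; }
    void setSizeIncrement(std::size_t increment) {
        increment_ = increment > 0 ? increment : 1;
    }

    double& operator[](std::size_t i) {
        assert(i < length_);
        return data_[i];
    }

    void append(double value) {
        if (length_ == capacity_) {
            // Out of physical space: grow by sizeIncrement() elements at once
            // instead of reallocating on every append.
            const std::size_t newCapacity = capacity_ + increment_;
            std::unique_ptr<double[]> bigger(new double[newCapacity]());
            for (std::size_t i = 0; i < length_; ++i) bigger[i] = data_[i];
            data_ = std::move(bigger);
            capacity_ = newCapacity;
        }
        data_[length_++] = value;
    }

private:
    std::size_t length_;
    std::size_t capacity_;
    std::size_t increment_ = 8;  // arbitrary default for this sketch
    std::unique_ptr<double[]> data_;
};
```

With an increment of 4, five appends to an empty array cause only two reallocations (capacity 4, then 8), and an array constructed with its final size causes none.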

Why is a new instance of a command created every time it is invoked from the command window? Is this somehow related to Maya’s undo capability? When do these instances of the command object get deleted?

Maya implements its infinite undo capability as follows:

When a command or tool is invoked, the creator function for that object will be called to create a new instance. That instance must contain local data members sufficient to retain state so that when its doIt method is called, it can save enough state to:

Typically, a command’s doIt method will just save the current state of what it is about to change for undo, then cache the parameters of the “about to be performed” operation and call redoIt.

redoIt operates off the cached parameters, and if called from the undo manager, can “redo” the operation without any further user interaction.

undoIt also operates off the cached data, and can also work without any further user interaction.

Additionally, when a tool is “finished”, its virtual method “finalize” (that is provided in the MPxToolCommand base class) will be called. This routine is responsible for constructing an MArgList containing a command that will “redo” the operation. This command string is written into the Maya Journal to record all the operations that have taken place.

If the virtual method MPxCommand::isUndoable is overridden and made to return “false” (it defaults in the base class to “true”), then right after the doIt method is called, Maya will call the destructor for the command instance. Otherwise, the instance is passed to the undo manager which will call its undoIt and redoIt members to implement undo and redo requests. When the undo queue is flushed, all the instances of the commands or tools are destroyed, thus freeing the local memory that is caching the parameters needed for undo or redo.
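The lifecycle above can be illustrated with a small, self-contained model. The Command, SetValueCommand and UndoManager classes are hypothetical stand-ins, not MPxCommand or Maya’s undo manager; they only show why each invocation needs its own instance: the instance caches the old and new values, and it lives exactly as long as the undo queue keeps it.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical base class standing in for a command: every invocation gets
// a fresh instance that caches the state it needs for undo and redo.
class Command {
public:
    virtual ~Command() = default;
    virtual void doIt() = 0;
    virtual void redoIt() = 0;
    virtual void undoIt() = 0;
    virtual bool isUndoable() const { return true; }
};

// Hypothetical command that sets an integer "attribute".
class SetValueCommand : public Command {
public:
    SetValueCommand(int& target, int newValue)
        : target_(target), newValue_(newValue) {}

    void doIt() override {
        oldValue_ = target_;  // save what is about to change, for undo
        redoIt();             // then perform the cached operation
    }
    void redoIt() override { target_ = newValue_; }
    void undoIt() override { target_ = oldValue_; }

private:
    int& target_;
    int newValue_;
    int oldValue_ = 0;
};

// Keeps undoable instances alive until the queue is flushed.
class UndoManager {
public:
    void execute(std::unique_ptr<Command> cmd) {
        cmd->doIt();
        if (cmd->isUndoable()) {
            redo_.clear();  // a new command invalidates the redo history
            done_.push_back(std::move(cmd));
        }
        // A non-undoable command is destroyed here, right after doIt().
    }

    void undo() {
        if (done_.empty()) return;
        done_.back()->undoIt();
        redo_.push_back(std::move(done_.back()));
        done_.pop_back();
    }

    void redo() {
        if (redo_.empty()) return;
        redo_.back()->redoIt();
        done_.push_back(std::move(redo_.back()));
        redo_.pop_back();
    }

    // Flushing destroys every cached instance and the memory it holds.
    void flush() { done_.clear(); redo_.clear(); }

    std::size_t cachedCommands() const { return done_.size() + redo_.size(); }

private:
    std::vector<std::unique_ptr<Command>> done_;
    std::vector<std::unique_ptr<Command>> redo_;
};
```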

Regardless of whether a single CV or multiple CVs are selected via the UI, the MItSelectionList iterator will only return one selection item. If multiple CVs were selected, how can I find which ones?

If you select multiple CVs and then use the MItSelectionList iterator class of the API, all the selected CVs will be returned in a single component.

To access the individual CVs you must use the getDagPath method of MItSelectionList, which returns both an MDagPath and an MObject, then pass these as arguments to the constructor of an iterator. For NURBS surfaces, the MItSurfaceCV class would be used to extract the individual surface CVs. The iterators MItCurveCV, MItMeshVertex, MItMeshEdge and MItMeshPolygon can be used to perform similar operations on NURBS curves and on the various components of polygonal objects. The lassoTool plug-in provides a good example of how this is done.

What is the meaning of the value that MItSurfaceCV::index() returns?

One of the components of a NURBS surface is an array of CVs. The index method returns the position of the given CV in the array maintained by the surface. The UI represents this as a 2D array of CVs with rows and columns of CVs corresponding to U and V indices. Internally this is stored as a 1D array (row1, row2, row3, etc.) and index returns the position of the CV in this data structure. (Incidentally, if you created the surface via MFnNurbsSurface::create, this is the way you had to provide the CV array). You can convert this to a pair of 2D indices via:

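```cpp
// surfaceFn: an MFnNurbsSurface attached to the surface
// cvIter:    the MItSurfaceCV iterator positioned on the CV
int sizeInV = surfaceFn.numCVsInV();
int indexU  = cvIter.index() / sizeInV;
int indexV  = cvIter.index() % sizeInV;
```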

The getIndex method of the MItSurfaceCV class returns the indexU and indexV values using exactly this calculation.
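For reference, the same conversion and its inverse can be written as plain C++ and checked for round trips. The CVIndex struct and both functions are illustrative names, not Maya API; numCVsInV stands in for the value returned by MFnNurbsSurface::numCVsInV().

```cpp
#include <cassert>

// Illustrative names, not Maya API. CVs are stored row by row, so the
// V index varies fastest within the flat array.
struct CVIndex {
    int u;
    int v;
};

// Flat index (as from MItSurfaceCV::index()) -> (u, v).
CVIndex toUV(int index, int numCVsInV) {
    assert(numCVsInV > 0 && index >= 0);
    return CVIndex{index / numCVsInV, index % numCVsInV};
}

// (u, v) -> flat index; the inverse of toUV().
int toFlat(const CVIndex& uv, int numCVsInV) {
    assert(uv.v >= 0 && uv.v < numCVsInV);
    return uv.u * numCVsInV + uv.v;
}
```

On a surface with 5 CVs in V, flat index 13 corresponds to U index 2 and V index 3.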

How can I compare two components of an object to see if they are the same? Specifically, I need to compare two CVs on a NURBS surface, but this problem appears to apply to all types of components of both NURBS and polygonal objects.

There is no simple mechanism for doing this. Components are identical if they are members of the same DAG path (the MDagPath class defines an == operator to perform this comparison), and if their indices, returned by the index methods of the various component iterators, are also the same.
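A minimal sketch of that rule, assuming a std::string path stands in for MDagPath (whose == operator plays the same role) and an int for the iterator’s index() value. The ComponentId type and sameComponent function are hypothetical.

```cpp
#include <cassert>
#include <string>

// Hypothetical identity of a component: the DAG path of the object (a string
// standing in for MDagPath) plus the index reported by the component iterator.
struct ComponentId {
    std::string dagPath;
    int index;
};

// Two components are the same only if both the path and the index match.
bool sameComponent(const ComponentId& a, const ComponentId& b) {
    return a.dagPath == b.dagPath && a.index == b.index;
}
```

Note that the same CV seen through two different instances of a surface has two different DAG paths, so under this rule it counts as two distinct components.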

I created an instance of an MFnNurbsSurface function set and got good data out of it. However, I then called MGlobal::viewFrame to move the animation to another frame. I know the surface moved, but I got the same data out of the function set as I did the first time. How can I make this work?

You will have to restructure your code a little to make this work.

After a call to MGlobal::viewFrame, it is necessary to rebind your function sets to the objects they are accessing. This can be handled by code that looks like the following:

```cpp
MDagPath path;
// initialize path somehow to refer to the object in question

MFnNurbsSurface surf;

for (int i = 1; i <= maxFrames; ++i) {
    MGlobal::viewFrame(i);
    // Rebind the function set so it reads this frame's data.
    surf.setObject(path);
    // ... query surf here ...
}
```

The MDagPath will remain valid across frames, and thus can be used to rebind the function set to the object in each frame.

We would like to have full documentation on all the transformations on a CV as it is positioned in world space. For example, CVs end up in their global position via a number of matrices, clusters, functions, animations etc. Documentation of these transforms explicitly and exactly would be very useful.

We believe that the set of possible transforms is both too complex and too dynamic to document in the general case. Take clusters for example. Clusters in Maya are implemented as deformers. This means that a deformer is put between the original surface and the new output surface.

It is therefore possible to get the world transformation information from the deformed CV up to the world through the DAG shape that holds the deformation result. So, it is trivial to query the world space location of a point. We get that for free from our current implementation.

However, the local to world transformation of any point is arbitrarily complex. Nodes can easily implement procedural transformations that don’t involve matrices at all. Conceptually, the architecture is one in which a point in local space is passed through a series of “black boxes”, each of which affects its position, and we simply don’t know what is in all of the boxes.

This implies that we can’t set the position of a point (or CV) exactly in world space either. To do so would require computing the inverse of the local to world space transformation, and I have just been busy telling you we don’t know how to define that transform in general.

That being said, if you are just interested in the order in which Maya’s transform node applies scale, rotation, translation and other transformations from its attributes to an object, this is described in detail in the documentation for the transform node in the Commands online documentation in xform.html.

Can we derive our own custom classes/nodes from the standard classes/nodes such that they will be correctly processed in the DG? This capability has been alluded to in the past, and we just want the most recent confirmation on this capability.

Maya maintains ownership of the MObjects which it presents as opaque data. So it is not possible to derive from these objects.

Additionally it isn’t possible to derive from Maya’s internal nodes. For example, let’s say you wanted to derive something from the internal revolve node. The revolve node, like all nodes, is simply a compute function on a set of attributes. To make modifications to this node you would require the source code to the compute method - which we can’t give you. Instead what can be done is to connect new (user-written) nodes (see the next paragraph) to the attributes of the Maya revolve node and use these new nodes to modify the input and output of that node. Alternatively, in this case, a new revolve node could be written and used in place of the system defined one.

Maya Proxy objects are designed expressly for derivation, and allow new user nodes to be added to Maya.

As well, it is possible to derive from the function sets to create new operations on the MObjects (limited only by the fact that the MObjects are opaque, and the source for the implementation of the function set members is unavailable).

So, in summary, you can’t derive directly from Maya nodes, but you can create your own nodes, insert them into the dependency graph and have them either replace an existing Maya node, or modify the input or output parameters of Maya nodes.

It looks like the user-defined nodes are fundamentally different from the already existing nodes. If you look at something like MFnNurbsCurve, you see that eventually, it is derived from MFnBase, but if you look in the circle example, you see that the “circle” is derived from MPxNode.

There is a fundamental difference between “function sets” and “maya objects”. Maya internal objects (which include dependency nodes) are encapsulated in MObjects, and function sets, which are indeed derived from MFnBase, are initialized “with” an MObject and then act upon it. This is sort of an “outward-in” kind of paradigm in which user written code is allowed to affect the internals of Maya objects.

To create a user-defined dependency node, we have to do something completely different, which is why the MPxNode classes are necessary. Effectively, what we do is create a new internal Maya node, and “hook up” its methods to the ones defined in the customer generated node. For example, if during the evaluation of the dependency graph it is necessary to “recompute” a user defined node, what happens is:

This is repeated for any of the attributes of the node that require recomputation. This is sort of an “inward-out” kind of paradigm in which internal Maya objects have to call a user written compute function.

So, yes there are fundamental differences, but that is intentional and caused by the fact that the problems are fundamentally different.

It is also unclear if we can accomplish a “persistent” effect through the API. That is, if the CV gets altered, the arclen will change. So anything that is attached to our arclen attribute would need to be moved or sized accordingly.

As long as the propagation of values is done through connections in the dependency graph, this is taken care of automatically. For example:

MayaNodeA.cvSet -> CustomerNode.input -> CustomerNode.output (computes scale from arclen) -> MayaNodeB.scale

A change in a CV in MayaNodeA automatically forces a recompute in CustomerNode and MayaNodeB, and the object is moved or sized accordingly.
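The effect can be illustrated with a toy push-dirty/pull-compute model: setting a source value only marks downstream nodes dirty, and each node recomputes when its output is next requested. The Node class below is hypothetical and far simpler than Maya’s dependency graph; it is not Maya’s implementation.

```cpp
#include <cassert>
#include <functional>
#include <utility>
#include <vector>

// Toy dependency node (not Maya's implementation): a change upstream only
// marks downstream nodes dirty; values are recomputed lazily on request.
class Node {
public:
    explicit Node(std::function<double(double)> compute)
        : compute_(std::move(compute)) {}

    // Wire this node's input to the output of `upstream`.
    void connectInput(Node* upstream) {
        input_ = upstream;
        upstream->outputs_.push_back(this);
    }

    // Set a source value directly and dirty everything downstream.
    void setValue(double v) {
        value_ = v;
        dirty_ = false;
        markOutputsDirty();
    }

    // Pull: recompute from the input only if something upstream changed.
    double value() {
        if (dirty_ && input_ != nullptr) {
            value_ = compute_(input_->value());
            ++evaluations;
        }
        dirty_ = false;
        return value_;
    }

    int evaluations = 0;  // how many times compute_ has run

private:
    void markOutputsDirty() {
        for (Node* n : outputs_) {
            n->dirty_ = true;
            n->markOutputsDirty();
        }
    }

    std::function<double(double)> compute_;
    Node* input_ = nullptr;
    std::vector<Node*> outputs_;
    double value_ = 0.0;
    bool dirty_ = true;
};
```

Chaining three such nodes (a source standing in for the CV data, a customer node that turns an arclen into a scale using an arbitrary factor, and a scale node) reproduces the behavior described above: a new source value is picked up the next time the scale is read, and an unchanged source is not recomputed.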

We want to be able to drive an attribute of one object by a derivable value of another object. For example, we may want to drive the scale of one object by the arclen of a curve. Or we may want to translate an object according to the evaluated value of a curve at a particular parametric value. The examples show how to instantiate a dependency node that allows us to tie together attributes of objects, but can we take it one step further and have the driving value be an evaluated value, e.g. myNurbsCurve.arclen() or myNurbsCurve.point(0.5)?

Additionally when creating your own node, can you create an input attribute that takes a node or MObject as its input, rather than a float or a string? This would allow us to jump back into the MFnNurbsCurve and use whatever derived methods we want.

Such a construct is fairly easily handled in the Maya architecture by writing a node that has an MFnTypedAttribute. One parameter of the declaration of such an attribute is the “type” it accepts as input. It is quite possible to specify it as taking a nurbsCurve by using the type “kNurbsCurve”. Since your node will then have the actual curve, you can compute anything you want based upon it.

So the node I think you want to write would have a nurbsCurve input attribute and three double (scale) output attributes. All you need to do is connect its input to a node that produces a curve, connect its outputs to the scale inputs of the node you want to animate, and the dependency graph will do the rest. Any change in the curve node will automatically propagate through the graph and update the scale of the final object. The example plug-in called arcLenNode demonstrates how to do this.

Furthermore, inside your node, you will really have a nurbsCurve object, and thus you can attach an MFnNurbsCurve function set to that object. Then you can use any of its methods that compute values you need. The “length” function will compute the arclen of the curve, and the “pointAtParm” method will return a point at a particular parameter value.

As well, a similar result can be obtained by simply hooking together existing dependency nodes. For example, the subCurve and curveInfo nodes allow you to compute the arclen of a subcurve as shown below:

```mel
global float $arclen;

// Create a curve
curve -p -5 0 8 -p -9 0 2 -p -3 0 5 -p -6 0 -2
      -p 1 0 3 -p -4 0 -5 -p 4 0 1;

// Create a node to extract part of a curve,
// set the parts to keep, and attach it to
// the curve created above.
createNode -n subCurve1 subCurve;
setAttr subCurve1.minValue 0.4;
setAttr subCurve1.maxValue 1.4;
connectAttr curveShape1.local subCurve1.inputCurve;

// Create a curveInfo node, and connect
// it to the output of the subcurve.
createNode -n curveInfo1 curveInfo;
connectAttr subCurve1.outputCurve curveInfo1.inputCurve;

// Get the arclen of the subcurve from the
// curveInfo node and print it.
$arclen = `getAttr curveInfo1.arcLength`;
print("curve[0.4:1.4] has arclen " + $arclen + "\n");

// Change the part of the curve extracted by the
// subCurve node and print the new arclen
setAttr subCurve1.minValue 0.0;
setAttr subCurve1.maxValue 5.0;
$arclen = `getAttr curveInfo1.arcLength`;
print("curve[0.0:5.0] has arclen " + $arclen + "\n");
```

I need a better understanding of the differences between DagNodes and Dependency Nodes and how these relate to the API object classes. In particular, how do I know when to use the getDagPath method from the MItSelectionList iterator, and when do I use getDependNode?

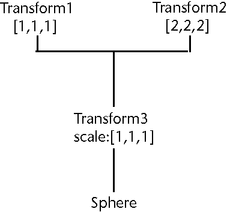

DAG nodes describe how an instance of an object is constructed from a piece of geometry. For example, when you create a sphere, you create both a geometry node (the sphere itself) and a Transform Node that allows you to specify where the sphere is located, its scaling, etc. It is quite possible to have multiple transform nodes attached to the same piece of geometry. For example:

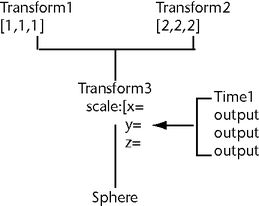

The dependency graph, however, is something new. All DAG nodes are also dependency nodes, but not vice-versa. For example there is a “time1” dependency node that can produce the frame number of the current animation. The “circleNode” and “sineNode” types created by the “circle.cc” and “sine.cc” plug-in examples are dependency nodes, however, they are not part of the DAG. Instead dependency nodes can be wired together to provide a dynamic evaluation graph that can end up affecting DAG nodes (and thus affecting what is drawn). For example,

In this example the x, y, and z scale parameters of Transform3 are driven by the frame number. Thus as the animation is run, the 2 instances of the sphere will grow.

So, now from the API, how do you know when to use getDagPath and when to use getDependNode?

Well, if you pick something on the screen with the mouse then you will always be picking an instance and thus you will always have a DAG node available, and you should use getDagPath.

If you pick something by name, then you might or might not get a DAG node. The right thing to do in this case is ask. The MItSelectionList iterator’s “itemType” method will return an element of an enum that will differentiate between the two node types, you can then call getDagPath or getDependNode as appropriate.

Yet another important thing to understand in Maya is that geometry DAG nodes do not have transformation matrices. They rely on the transform nodes above them for their transformation information. Because of this, selection of geometry in 3D views always causes the transform node above the geometry to be selected rather than the actual geometry node. This allows all of the transformation tools to work properly.

So, in the above diagram, clicking on Sphere1 in a 3D view will cause Transform2 to be selected.

For instance, if you are iterating through the selection list looking for the sphere and you want to perform an operation upon the sphere’s CVs, in the iteration, you will eventually come to Transform2.

If you get Transform2 as a dependency node (via getDependNode), then you have a transform node. From this transform node, you will not be able to find either the sphere or its CVs. Additionally, if your object is instanced (as in the first diagram), then you will have lost the information about which instance was selected.

If you get Transform2 as a DAG node, you will get a DAG path object. The DAG path object is more intelligent. It knows where the transform resides in the DAG. If you give the DAG path to the NURBS surface function set (or the iterator), then the sphere node under the transform will be found automatically and the CVs will be available for modification.
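Why a node alone loses instance information can be shown with a toy DAG in which one shape has two transform parents. The node names and the allPaths function are hypothetical; each returned string plays the role an MDagPath plays in Maya.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Toy DAG (not the Maya API): each node lists its parents. A node with no
// parents sits directly under the world.
using Dag = std::map<std::string, std::vector<std::string>>;

// Returns every path from the world down to `node`, written "|a|b|node".
// An instanced node has one path per instance.
std::vector<std::string> allPaths(const Dag& dag, const std::string& node) {
    auto it = dag.find(node);
    if (it == dag.end() || it->second.empty()) {
        return {"|" + node};
    }
    std::vector<std::string> result;
    for (const std::string& parent : it->second) {
        for (const std::string& prefix : allPaths(dag, parent)) {
            result.push_back(prefix + "|" + node);
        }
    }
    return result;
}
```

Given only the shape node, there are two candidate paths and nothing to say which instance was picked; a DAG path fixes exactly one of them.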

I can’t figure out how to get the transform matrix of an object. I have played around with attaching the MFnTransform function set to dependency node and dag paths, but can’t quite seem to get it right.

You were close. To accomplish this you must first get a DAG path structure for the object, then attach the MFnTransform function set to it. You can use that to get an MTransformationMatrix object, which can access and update the transform for the object in numerous ways, including returning the entire matrix. To solve your problem you would include code like:

```cpp
MDagPath mdagPath;   // initialize to refer to the object in question
MStatus status;
MTransformationMatrix transform;
MMatrix matrix;

if ( mdagPath.hasFn(MFn::kTransform) ) {
    // Attach the MFnTransform function set via the DAG path.
    MFnTransform transformNode(mdagPath, &status);
    // Get the transformation via the function set.
    transform = transformNode.transformation(&status);
    matrix = transform.asMatrix();
    ...
}
```

How can I create a revolve-like plug-in, and have it take a curve, create a surface from it, and when the curve is modified, regenerate the surface?

In order to implement this “history” functionality, you must write your own “revolve” node. It will take the curve as input (using the MFnTypedAttribute - see the arcLenNode plug-in) and output a surface. The output attribute of this node should then be connected to the create attribute of a nurbsSurface node which will draw it. In MEL the typical way to hook this up would be:

```mel
createNode transform -n revolvedSurface1;
createNode nurbsSurface -n revolvedSurfaceShape1 -p revolvedSurface1;
createNode yourRevolveNode -n yourRevolveNode1;
connectAttr yourRevolveNode1.outputSurface revolvedSurfaceShape1.create;
```

The simpleLoftNode example plug-in provides a good example of how to do this.

If I set up a deformation in Maya, and then traverse the DAG tree from a plug-in, under the transform node for the deformed surface I see two shape nodes, both of which can be interpreted as MFnNurbsSurface - one is the deformed shape, and one is the shape in some neutral position. I need a flag to indicate which is the neutral position, so I know that it’s not really there, and don’t need to operate on it.

The two surfaces are differentiated by their boolean intermediateObject attributes. If the value of the attribute is TRUE, then the node is the input surface for the deformation and can be ignored.

You can check the value of this attribute by creating a plug for the attribute on each of the two nodes and then getting the value of the attribute from the plug. Alternatively, the convenience routine isIntermediateObject in the MFnDagNode function set performs this operation.

How can one use Maya multi attributes to implement an array of a user defined data type?

This is not how you implement arrays of a user defined data type. When dealing with user defined data, Maya doesn’t know or care what the data looks like. As you point out, it is tempting to create one data type and then attempt to create multiple instances of it via multi-plugs, but multi-plugs were designed for multiple connections in the DG rather than data storage, so this won’t work.

Instead, to implement an array of data, one must create the array inside a user defined data type and use the method outlined in the blindComplexDataCmd example to access that array. For example,

```cpp
class blindComplexData : public MPxData {
public:
    // override methods like readBinary()....

    // define any data you want, for example, an array of integers
    int a[12];
};
```

After adding the above user-defined data as a dynamic attribute, access the data by attaching a plug to it in the usual way and calling getValue() to get a handle to the data. Once you have the handle, convert it to a pointer to an instance of your custom data type.

Given a NURBS surface that is deformed by a cluster, moving the CVs of this surface via the MFnNurbsSurface function set seems to have no effect. Why is this, and how can this be done?

If clusters are present, then you have a deformer network which is computing the shape of the surface that appears in the Dag. If you move a CV on that “final & visible” surface, the deformer will simply move it back since the deformer is creating that surface. There are two ways to cope with this. First, find the “intermediate object” that is the input surface to the deformer and move the CV there. The problem with this approach is that it is difficult to predict what effect moving an input CV will have on its position on the output surface.

The other approach is via tweaks which provide the capability to move (or tweak) a CV after a deformer has determined its position. There is no specific API in MFnNurbsSurface for handling tweaks (and there won’t be for Maya 1.0) but you can create a tweak by directly modifying the attributes of the nurbsSurface dependency node. To do a tweak you must:

If the boolean attribute “relativeTweak” is true, the values in the CV array are used to move the corresponding CV relative to the position in which the deformer puts it, otherwise they are absolute positions of the CVs.

Can a plug-in open its own graphics window (via winopen(), for example) and do graphics without interfering with the rest of Maya? If so, should it use OpenGL? How does it handle events (such as mouse movements within its own window) and still work with the rest of Maya? Does Maya provide an event handling mechanism that the plug-in can use and be compatible with the rest of Maya?

Yes. In fact the helixMotifCmd example plug-in (not available on Windows) shows how to open a Motif window. You can also use OpenGL in such a window. There is one restriction, however: Maya must retain control of the event loop. This should not be a problem, as your window simply needs to register callbacks for its UI elements, and Maya will happily invoke them for you.

A question for you, however, is “why do you really want to do this?”, as there are several other ways to create windows that you might prefer.

First of all, the MEL scripting language in Maya allows you to create new windows and access virtually all Maya features. If you need a window for a dialog box, doing this in MEL is both easy and far and away the most efficient method of implementing such a feature.

Maya also contains a new class called MPxLocatorNode that allows you to create DAG objects and provide a draw routine for them implemented with OpenGL calls. These objects are called locators because they do not render. So, you can use them for screen feedback, but not to create renderable objects. The example plug-ins footPrintNode and cvColorNode provide examples of how to create and use locators.

As for input events, we currently supply an API class called MPxContext that allows you to handle such events. The example plug-ins marqueeTool, helixTool and lassoTool all demonstrate how to implement this.

How does one tell when both left and mid buttons are pressed at the same time? Right now only one is reported (I think).

When you press a second button it does not generate an event but instead it is stuffed into the modifier for the hold event for the first button. For example, say the user presses the left mouse button. To see if the user has pressed the middle mouse button while the left is still down, check the modifier for the doHold event using MEvent::isModifierMiddleMouseButton().

How can I tell which 3D window is active? I thought of using the camera name to do this, but this will fail if the user changes the camera name. I need a function returning which window the 3dview is (XY, XZ, YZ, pers).

This is a little difficult. Because any view can be arbitrarily tumbled or changed into a perspective view, a general solution to this problem requires a bit of work.

M3dView::active3dView will give you the active view from which you can get the camera. You can then use MFnCamera methods upDirection and rightDirection to get the respective vectors, and compare them against MVector::xAxis, MVector::xNegAxis, etc. in order to determine the view that the user is seeing.
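The axis comparison described above can be sketched in standalone C++. The Vec3 type, the alignedAxis and classifyView helpers, and the isOrtho flag are hypothetical stand-ins, not Maya API names. In a plug-in, the two vectors would come from MFnCamera::upDirection() and MFnCamera::rightDirection() on the active view's camera. The sketch classifies a view by the plane the two vectors span, so it does not care which way each axis points. It also assumes the caller already knows whether the camera is orthographic.

```cpp
#include <algorithm>
#include <cmath>
#include <string>

// Hypothetical stand-in for MVector: a 3D direction vector.
struct Vec3 {
    double x, y, z;
};

// Returns 'X', 'Y' or 'Z' if v is parallel or antiparallel to that world
// axis (within tol, after normalizing), otherwise 0.
char alignedAxis(const Vec3& v, double tol = 1e-6) {
    const double len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    if (len == 0.0) return 0;
    if (std::fabs(std::fabs(v.x) / len - 1.0) < tol) return 'X';
    if (std::fabs(std::fabs(v.y) / len - 1.0) < tol) return 'Y';
    if (std::fabs(std::fabs(v.z) / len - 1.0) < tol) return 'Z';
    return 0;
}

// Classifies a view as "XY", "XZ", "YZ", "pers" or "other" from the camera's
// up and right directions. isOrtho is assumed to be supplied by the caller.
std::string classifyView(const Vec3& up, const Vec3& right, bool isOrtho) {
    if (!isOrtho) return "pers";
    const char u = alignedAxis(up);
    const char r = alignedAxis(right);
    // Not axis-aligned (for example, a tumbled orthographic view) or degenerate.
    if (u == 0 || r == 0 || u == r) return "other";
    // Sort the two axis letters so the plane name does not depend on
    // which vector is up and which is right.
    return std::string{std::min(u, r), std::max(u, r)};
}
```

For up = (0, 1, 0) and right = (1, 0, 0) on an orthographic camera, classifyView returns "XY". A view tumbled even slightly off-axis falls through to "other", which matches the point above that a general solution has to handle arbitrary orientations.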

The viewToWorld method in M3dView correctly maps 2D coordinates to 3D coordinates in orthographic windows, but returns a bogus value in its cursor argument in the perspective window.

For the perspective view, you must use the version of viewToWorld which returns points on both the near and far clipping planes given a point in the 2D view. Any point on the line segment connecting those points is a valid solution to the mapping, and you will have to determine on your own which of these points you wish to use.
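Once you have the near and far clipping-plane points, every candidate 3D position lies on the segment between them. The standalone C++ sketch below parameterizes that segment. It then shows one possible way to pick a single point: intersecting the segment with a horizontal plane such as the ground plane. The Point3 type, the function names, and the choice of a y = constant plane are illustrative assumptions, not part of the Maya API. In a plug-in, the two endpoints would come from the near/far version of M3dView::viewToWorld. The sketch requires C++17 for std::optional.

```cpp
#include <cmath>
#include <optional>

// Hypothetical stand-in for a world-space point.
struct Point3 {
    double x, y, z;
};

// Point at parameter t along the segment from nearPt (t = 0) to farPt (t = 1).
Point3 pointOnSegment(const Point3& nearPt, const Point3& farPt, double t) {
    return {nearPt.x + t * (farPt.x - nearPt.x),
            nearPt.y + t * (farPt.y - nearPt.y),
            nearPt.z + t * (farPt.z - nearPt.z)};
}

// One possible selection policy: the point where the segment crosses the
// plane y = planeY. Returns std::nullopt if the segment is parallel to the
// plane or does not reach it between the near and far clipping planes.
std::optional<Point3> intersectHorizontalPlane(const Point3& nearPt,
                                               const Point3& farPt,
                                               double planeY) {
    const double dy = farPt.y - nearPt.y;
    if (std::fabs(dy) < 1e-12) return std::nullopt;
    const double t = (planeY - nearPt.y) / dy;
    if (t < 0.0 || t > 1.0) return std::nullopt;
    return pointOnSegment(nearPt, farPt, t);
}
```

Other policies, such as snapping to existing geometry or keeping a fixed distance from the camera, would replace intersectHorizontalPlane while reusing the same segment parameterization.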

Animation “created” in the DG via a user-defined node does not show up in the animation curve graphs, i.e. there is no way to see the results of procedurally generated animation.

Only animation generated via keyframe animation shows up in the animation curve graphs.

Unfortunately, this is unlikely to change. For keyframed animation, we need only check whether the node connected to an attribute is an animCurve node. If so, it is quite simple to extract the keyframed attribute values for display in the graph.

For any other type of animation, the only way we know that an attribute is animated is that a time node appears somewhere in the graph connected to it.

For a node that performs a procedural animation, we would actually have to run the entire animation and save the output attribute values at each frame for display. This is potentially extremely computationally intensive. Moreover, the resulting curves would not be editable, because they would show only output values, with no knowledge of how those values are computed.

I need in some way to be able to query any data at any animation frame. In OM/OA I do this by doing a viewFrame(x) and then checking the data. This is very slow however, and really what I might want to know is the position of this particular node at time x. In OM if you do a viewframe on a sub node it usually returns an incorrect value depending on how the animation has been set up.

This should be better in Maya as the dependency graph will make sure that the “subnode” kind of information is always accurate.

You can query the data you are interested in much as you would in OM/OA: call MGlobal::viewFrame to set the time, then query the attributes of the node you are interested in (such as tx, ty, tz) using the getValue methods of the MPlug class. As you point out, however, this is somewhat slow, since viewFrame changes the global time.

In the Maya API you have another option, however. You can create an instance of the MDGContext class initialized to the time you are interested in. Pass it to the getValue method of the MPlug class, and the attribute will be evaluated at the specified time. Only as much of the dependency graph as necessary is re-evaluated to guarantee an accurate answer. If the attribute depends on many other nodes, the evaluation can be slow; otherwise it should be fast.

You should also be aware that Maya does not store the state of animated objects at every possible frame. The only way to determine an object's animation state at a particular time is to query it at that time using one of the two methods described above.

Can time be set randomly with MGlobal::viewFrame()? Are particles and IK stuff properly updated in all cases?

You can set the time arbitrarily, and everything will always be updated properly. However, the underlying mechanism is highly optimized for monotonically increasing time, so jumping around in time randomly can incur a large performance penalty.

The method by which MFnMotionPath moves objects is unclear, in particular how it interacts with the DAG. Suppose you have a transform parenting a child shape. You can set keyframes to animate the translate channels, and they turn green. Or you can use a motion path, and it doesn't affect the translate channels.

Note that if you do have both MotionPath and translate, the MotionPath overrides the translate, and translate is ignored.

What is the mechanism by which MotionPath affects the movement?

Can you read the value at any given time? How?

If I’m traversing a DAG tree, how can I tell an object’s position?

Keyframes are provided by anim curve dependency nodes, and motion paths are similarly implemented as dependency nodes. These nodes take effect because they are connected to the transformation attributes of the parent transform in the DAG. When the transform needs a value, it gets it from the anim curve or motion path node.

So, when you connect the motion path node, the anim curve node gets disconnected, and so no longer affects the transform. You can only have one of these positional nodes connected to a transform at a time, and the last one connected wins.

Regardless of whether the transform is connected to an anim curve or a motion path node, you can always ask the transform node for its transformation information and get the right values.

I haven't been able to find any documentation or sample code describing the interpolation system used by Maya for the quaternion curves. Could you tell me where I can find the details on it?

For spline interpolation, we use squad, which is described in the first two references below.
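For readers who want the formula itself, here is the standard textbook form of squad. It interpolates between keys q1 and q2, with inner control points s1 and s2, as squad(h) = slerp(slerp(q1, q2, h), slerp(s1, s2, h), 2h(1 - h)). Each control point is s_i = q_i * exp(-(log(q_i^-1 * q_(i+1)) + log(q_i^-1 * q_(i-1))) / 4). The standalone C++ sketch below implements that textbook form. The Quat type and helper names are hypothetical, and the code is not a statement of how Maya computes its tangents internally. It assumes unit quaternions and that no pair passed to slerp is (nearly) antipodal. In practice, this means neighboring keys have already been sign-aligned.

```cpp
#include <cmath>

// Hypothetical unit quaternion w + xi + yj + zk.
struct Quat { double w, x, y, z; };

Quat scale(const Quat& q, double s) { return {q.w * s, q.x * s, q.y * s, q.z * s}; }
Quat add(const Quat& a, const Quat& b) { return {a.w + b.w, a.x + b.x, a.y + b.y, a.z + b.z}; }
double dot(const Quat& a, const Quat& b) { return a.w * b.w + a.x * b.x + a.y * b.y + a.z * b.z; }
Quat conj(const Quat& q) { return {q.w, -q.x, -q.y, -q.z}; }  // inverse of a unit quaternion

// Hamilton product a * b.
Quat mul(const Quat& a, const Quat& b) {
    return {a.w * b.w - a.x * b.x - a.y * b.y - a.z * b.z,
            a.w * b.x + a.x * b.w + a.y * b.z - a.z * b.y,
            a.w * b.y - a.x * b.z + a.y * b.w + a.z * b.x,
            a.w * b.z + a.x * b.y - a.y * b.x + a.z * b.w};
}

// log of a unit quaternion (cos t, sin t * v) is the pure quaternion (0, t * v).
Quat qlog(const Quat& q) {
    const double vlen = std::sqrt(q.x * q.x + q.y * q.y + q.z * q.z);
    if (vlen < 1e-12) return {0, 0, 0, 0};
    const double k = std::atan2(vlen, q.w) / vlen;
    return {0, q.x * k, q.y * k, q.z * k};
}

// exp of a pure quaternion (0, t * v) is (cos t, sin t * v).
Quat qexp(const Quat& q) {
    const double t = std::sqrt(q.x * q.x + q.y * q.y + q.z * q.z);
    if (t < 1e-12) return {1, 0, 0, 0};
    const double k = std::sin(t) / t;
    return {std::cos(t), q.x * k, q.y * k, q.z * k};
}

// Spherical linear interpolation without shortest-path flipping, as squad expects.
Quat slerp(const Quat& a, const Quat& b, double t) {
    double c = dot(a, b);
    if (c > 0.9995) {  // nearly identical: normalized linear interpolation
        const Quat r = add(scale(a, 1 - t), scale(b, t));
        return scale(r, 1 / std::sqrt(dot(r, r)));
    }
    c = std::fmax(-1.0, std::fmin(1.0, c));
    const double theta = std::acos(c);
    const double s = std::sin(theta);
    return add(scale(a, std::sin((1 - t) * theta) / s), scale(b, std::sin(t * theta) / s));
}

// Inner control point for key q, given its neighbors qPrev and qNext.
Quat squadControl(const Quat& qPrev, const Quat& q, const Quat& qNext) {
    const Quat qi = conj(q);
    const Quat sum = add(qlog(mul(qi, qNext)), qlog(mul(qi, qPrev)));
    return mul(q, qexp(scale(sum, -0.25)));
}

// squad(h) = slerp(slerp(q1, q2, h), slerp(s1, s2, h), 2h(1 - h)), h in [0, 1].
Quat squad(const Quat& q1, const Quat& q2, const Quat& s1, const Quat& s2, double h) {
    return slerp(slerp(q1, q2, h), slerp(s1, s2, h), 2 * h * (1 - h));
}
```

Consider keys that rotate about one axis in equal steps. There the control points coincide with the keys, and squad reduces to plain slerp between q1 and q2.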

How do I add Windows-specific code to my plug-in?

Use #ifdef _WIN32 around the Windows-specific code.
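Here is a minimal sketch of the pattern. Code inside the #ifdef _WIN32 block, including Windows-only headers, is compiled only when the compiler defines _WIN32. The Microsoft compiler defines it for both 32-bit and 64-bit targets. The #else branch covers the other platforms. The platformName function is a hypothetical example.

```cpp
#include <string>

#ifdef _WIN32
#include <windows.h>  // Windows-only header: compiled only on Windows
#endif

// Returns a short description of the platform this code was compiled for.
std::string platformName() {
#ifdef _WIN32
    // Windows-specific code goes here.
    return "Windows";
#else
    // Code for other platforms goes here.
    return "non-Windows";
#endif
}
```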

How do I debug my plug-in with Visual C++?

Select Project > Settings, then select the Debug tab. In the Executable for debug session field, type the full pathname to the Maya executable, for example:

C:\Program Files\Autodesk\Maya2010\bin\Maya.exe

Use the F9 function key to toggle breakpoints in your plug-in source code. When you are ready to begin debugging, select Build > Start Debug > Go.

How do I get the handle to the application instance (the HINSTANCE) for my plug-in?

We have saved the HINSTANCE for the plug-in in a global variable, MhInstPlugin, which should be available if you have included the standard set of plug-in include files. Specifically, the variable is defined in the MFnPlugin.h include file.

What do I do if I get the following warning?

warning C4190: 'initializePlugin' has C-linkage specified, but returns UDT 'MStatus' which is incompatible with C

Nothing. The compiler will complain about this, but it will do the right thing. The warning is harmless.

Why do I get compiler errors when I use the variables “near” and “far”?

These names are reserved in the Microsoft compiler environment. They are leftovers from 16-bit Windows, and the Windows headers define near and far as macros. You will need to rename your variables, for example to nearClip and farClip.

What do I do if I get the following error?

error C2065: 'uint' : undeclared identifier

The Windows equivalent for this is UINT. We have added a define to MTypes.h to solve this problem.

What do I do if I get the following error?

error C2065: 'alloca' : undeclared identifier

Add the following to your source code.

#ifdef _WIN32
#include <malloc.h>
#endif
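For context, here is a sketch of a complete source file that uses alloca portably. The #else branch includes <alloca.h> for non-Windows builds, which is an assumption that holds on typical Linux and macOS toolchains. copyToStackBuffer is a hypothetical helper. Memory from alloca lives on the stack and is released automatically when the calling function returns, so it must never be returned or kept after that.

```cpp
#include <cstddef>
#include <cstring>

#ifdef _WIN32
#include <malloc.h>  // declares alloca (a macro for _alloca) with the Microsoft compiler
#else
#include <alloca.h>  // assumption: declares alloca on typical Linux/macOS toolchains
#endif

// Copies src into a stack buffer obtained from alloca and returns the length
// of the copied string. The buffer is freed automatically on return.
std::size_t copyToStackBuffer(const char* src) {
    const std::size_t n = std::strlen(src) + 1;  // include the terminating '\0'
    char* buf = static_cast<char*>(alloca(n));
    std::memcpy(buf, src, n);
    return std::strlen(buf);
}
```

Keep alloca requests small. Requesting more stack than is available is undefined behavior and is not reported as an allocation failure.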